Adelson Publications

Rosenholtz Publications

Adelson Publications

| Visual dexterity: In-hand reorientation of novel and complex object shapes</a > T Chen, M Tippur, S Wu, V Kumar, E Adelson, P Agrawal

Science Robotics 8 (84), eadc9244, 2023

|

2023 | |

| GelSight Svelte: A Human Finger-shaped Single-camera Tactile Robot Finger with Large Sensing Coverage and Proprioceptive Sensing</a > J Zhao, EH Adelson

2023 IEEE/RSJ International Conference on Intelligent Robots and

Systems …, 2023 |

2</a > | 2023 |

| GelSight Svelte Hand: A Three-finger, Two-DoF, Tactile-rich, Low-cost Robot Hand for Dexterous Manipulation</a > J Zhao, EH Adelson

arXiv preprint arXiv:2309.10886, 2023

|

2023 | |

| Optomechanical Method to Measure Arterial Pulse and Assess Cardiopulmonary Hemodynamics</a > EH Adelson, A Biswas, M Thanikachalam

US Patent App. 18/020,332, 2023

|

2023 | |

| Flexible optical tactile sensor</a >

E Adelson, S Liu

US Patent App. 17/665,186, 2023

|

2023 | |

| Retrographic sensors with compact illumination</a >

E Adelson, B Romero, FF Veiga

US Patent 11,703,321, 2023

|

1</a > | 2023 |

| Retrographic sensor cartridge</a >

J Rohaly, MK Johnson, N Lauder, EH Adelson

US Patent App. 29/795,021, 2023

|

2023 | |

| Tactile-Reactive Roller Grasper</a >

S Yuan, S Wang, R Patel, M Tippur, C Yako, E Adelson, K Salisbury

arXiv preprint arXiv:2306.09946, 2023

|

2023 | |

| GelSight360: An Omnidirectional Camera-Based Tactile Sensor for Dexterous Robotic Manipulation</a > MH Tippur, EH Adelson

2023 IEEE International Conference on Soft Robotics (RoboSoft),

1-8, 2023 |

3</a > | 2023 |

| GelSight EndoFlex: A Soft Endoskeleton Hand with Continuous High-Resolution Tactile Sensing</a > SQ Liu, LZ Yañez, EH Adelson

2023 IEEE International Conference on Soft Robotics (RoboSoft),

1-6, 2023 |

7</a > | 2023 |

| GelSight Baby Fin Ray: A Compact, Compliant, Flexible Finger with High-Resolution Tactile Sensing</a > SQ Liu, Y Ma, EH Adelson

2023 IEEE International Conference on Soft Robotics (RoboSoft),

1-8, 2023 |

6</a > | 2023 |

| Tactofind: A tactile only system for object retrieval</a >

S Pai, T Chen, M Tippur, E Adelson, A Gupta, P Agrawal

arXiv preprint arXiv:2303.13482, 2023

|

3</a > | 2023 |

| FingerSLAM: Closed-loop Unknown Object Localization and Reconstruction from Visuo-tactile Feedback</a > J Zhao, M Bauza, EH Adelson

arXiv preprint arXiv:2303.07997, 2023

|

2</a > | 2023 |

| Visuotactile affordances for cloth manipulation with local control</a > N Sunil, S Wang, Y She, E Adelson, AR Garcia

Conference on Robot Learning, 1596-1606, 2023</span >

|

10</a > | 2023 |

| See, hear, and feel: Smart sensory fusion for robotic manipulation</a > H Li, Y Zhang, J Zhu, S Wang, MA Lee, H Xu, E Adelson, L Fei-Fei, R

Gao, … arXiv preprint arXiv:2212.03858, 2022

|

13</a > | 2022 |

| Visual dexterity: In-hand dexterous manipulation from depth</a >

T Chen, M Tippur, S Wu, V Kumar, E Adelson, P Agrawal

arXiv preprint arXiv:2211.11744, 2022

|

30</a > | 2022 |

| Surface topography measurement systems</a >

J Rohaly, EH Adelson

US Patent App. 17/855,710, 2022

|

2022 | |

| Surface topography measurement systems</a >

J Rohaly, EH Adelson

|

2022 | |

| Gelsight fin ray: Incorporating tactile sensing into a soft compliant robotic gripper</a > SQ Liu, EH Adelson

2022 IEEE 5th International Conference on Soft Robotics (RoboSoft),

925-931, 2022 |

23</a > | 2022 |

| Cable manipulation with a tactile-reactive gripper</a >

Y She, S Wang, S Dong, N Sunil, A Rodriguez, E Adelson

The International Journal of Robotics Research 40 (12-14),

1385-1401, 2021 |

194</a > | 2021 |

| Surface topography measurement systems</a >

J Rohaly, EH Adelson

US Patent App. 17/321,868, 2021

|

2021 | |

| Retrographic sensors</a >

EH Adelson, FR Cottrell

US Patent App. 17/193,175, 2021

|

2</a > | 2021 |

| Gelsight wedge: Measuring high-resolution 3d contact geometry with a compact robot finger</a > S Wang, Y She, B Romero, E Adelson

2021 IEEE International Conference on Robotics and Automation

(ICRA), 6468-6475, 2021 |

53</a > | 2021 |

| Tactile blood pressure imager</a >

MA Srinivasan, M Thanikachalam, EH Adelson, A Biswas

US Patent App. 17/044,321, 2021

|

2021 | |

| Surface topography measurement systems</a >

J Rohaly, EH Adelson

US Patent 10,965,854, 2021

|

1</a > | 2021 |

| Digger finger: Gelsight tactile sensor for object identification inside granular media</a > R Patel, R Ouyang, B Romero, E Adelson

Experimental Robotics: The 17th International Symposium,

105-115, 2021 |

16</a > | 2021 |

| Swingbot: Learning physical features from in-hand tactile exploration for dynamic swing-up manipulation</a > C Wang, S Wang, B Romero, F Veiga, E Adelson

2020 IEEE/RSJ International Conference on Intelligent Robots and

Systems …, 2020 |

78</a > | 2020 |

| Exoskeleton-covered soft finger with vision-based proprioception and tactile sensing</a > Y She, SQ Liu, P Yu, E Adelson

2020 ieee international conference on robotics and automation

(icra), 10075 …, 2020 |

45</a > | 2020 |

| Soft, round, high resolution tactile fingertip sensors for dexterous robotic manipulation</a > B Romero, F Veiga, E Adelson

2020 IEEE International Conference on Robotics and Automation

(ICRA), 4796-4802, 2020 |

60</a > | 2020 |

| Continuous contact-based three-dimensional measurement</a >

EH Adelson, MK Johnson, J Rohaly

US Patent 10,574,944, 2020

|

6</a > | 2020 |

| Design of a fully actuated robotic hand with multiple gelsight tactile sensors</a > A Wilson, S Wang, B Romero, E Adelson

arXiv preprint arXiv:2002.02474, 2020

|

24</a > | 2020 |

| Surface topography measurement systems</a >

J Rohaly, EH Adelson

US Patent App. 16/491,032, 2020

|

3</a > | 2020 |

| Exoskeleton-covered soft finger with vision-based proprioception and exteroception</a > Y She, SQ Liu, P Yu, E Adelson

arXiv preprint arXiv:1910.01287 4, 2019

|

5</a > | 2019 |

| End-to-end pixelwise surface normal estimation with convolutional neural networks and shape reconstruction using GelSight sensor</a > J Li, S Dong, EH Adelson

2018 IEEE International Conference on Robotics and Biomimetics

(ROBIO), 1292 …, 2018 |

16</a > | 2018 |

| Robust plant phenotyping via model-based optimization</a >

P Sodhi, H Sun, B Póczos, D Wettergreen

2018 IEEE/RSJ International Conference on Intelligent Robots and

Systems …, 2018 |

7</a > | 2018 |

| 3d shape perception from monocular vision, touch, and shape priors</a > S Wang, J Wu, X Sun, W Yuan, WT Freeman, JB Tenenbaum, EH Adelson

2018 IEEE/RSJ International Conference on Intelligent Robots and

Systems …, 2018 |

91</a > | 2018 |

| Gelslim: A high-resolution, compact, robust, and calibrated tactile-sensing finger</a > E Donlon, S Dong, M Liu, J Li, E Adelson, A Rodriguez

2018 IEEE/RSJ International Conference on Intelligent Robots and

Systems …, 2018 |

238</a > | 2018 |

| More than a feeling: Learning to grasp and regrasp using vision and touch</a > R Calandra, A Owens, D Jayaraman, J Lin, W Yuan, J Malik, EH

Adelson, … IEEE Robotics and Automation Letters 3 (4), 3300-3307, 2018</span >

|

313</a > | 2018 |

| Slip detection with combined tactile and visual information</a >

J Li, S Dong, E Adelson

2018 IEEE International Conference on Robotics and Automation

(ICRA), 7772-7777, 2018 |

127</a > | 2018 |

| Vitac: Feature sharing between vision and tactile sensing for cloth texture recognition</a > S Luo, W Yuan, E Adelson, AG Cohn, R Fuentes

2018 IEEE International Conference on Robotics and Automation

(ICRA), 2722-2727, 2018 |

115</a > | 2018 |

| Active clothing material perception using tactile sensing and deep learning</a > W Yuan, Y Mo, S Wang, EH Adelson

2018 IEEE International Conference on Robotics and Automation

(ICRA), 4842-4849, 2018 |

115</a > | 2018 |

| Road-segmentation-based curb detection method for self-driving via a 3D-LiDAR sensor</a > Y Zhang, J Wang, X Wang, JM Dolan

IEEE transactions on intelligent transportation systems 19 (12),

3981-3991, 2018 |

146</a > | 2018 |

| Cloth texture recognition using vision and tactile sensing</a >

S Luo, W Yuan, E Adelson, AG Cohn, R Fuentes

ICRA 2018 workshop: Active touch for perception and interaction: how

nature …, 2018 |

3</a > | 2018 |

| Gelsight: High-resolution robot tactile sensors for estimating geometry and force</a > W Yuan, S Dong, EH Adelson

Sensors 17 (12), 2762, 2017

|

681</a > | 2017 |

| The feeling of success: Does touch sensing help predict grasp outcomes?</a > R Calandra, A Owens, M Upadhyaya, W Yuan, J Lin, EH Adelson, …

arXiv preprint arXiv:1710.05512, 2017

|

181</a > | 2017 |

| Improved gelsight tactile sensor for measuring geometry and slip</a >

S Dong, W Yuan, EH Adelson

2017 IEEE/RSJ International Conference on Intelligent Robots and

Systems …, 2017 |

200</a > | 2017 |

| Tracking objects with point clouds from vision and touch</a >

G Izatt, G Mirano, E Adelson, R Tedrake

2017 IEEE International Conference on Robotics and Automation

(ICRA), 4000-4007, 2017 |

78</a > | 2017 |

| Shape-independent hardness estimation using deep learning and a gelsight tactile sensor</a > W Yuan, C Zhu, A Owens, MA Srinivasan, EH Adelson

2017 IEEE International Conference on Robotics and Automation

(ICRA), 951-958, 2017 |

164</a > | 2017 |

| Visual wetness perception based on image color statistics</a >

M Sawayama, EH Adelson, S Nishida

Journal of vision 17 (5), 7-7, 2017

|

47</a > | 2017 |

| High-resolution surface measurement systems and methods</a >

EH Adelson, MK Johnson

US Patent 9,538,056, 2017

|

5</a > | 2017 |

| Development of GelSight: A High-resolution Tactile Sensor for Measuring Geometry and Force</a > W Yuan, S Dong, EH Adelson

|

2017 | |

| Connecting look and feel: Associating the visual and tactile properties of physical materials</a > W Yuan, S Wang, S Dong, E Adelson

Proceedings of the IEEE Conference on Computer Vision and

Pattern …, 2017 |

109</a > | 2017 |

| Motion, Early Vision, and the Plenoptic Function</a >

EH Adelson

Frontiers in Optics, FTh4I. 3, 2016

|

2016 | |

| Estimating object hardness with a gelsight touch sensor</a >

W Yuan, MA Srinivasan, EH Adelson

2016 IEEE/RSJ International Conference on Intelligent Robots and

Systems …, 2016 |

76</a > | 2016 |

| Can you see what you feel? Color and folding properties affect visual–tactile material discrimination of fabrics</a > B Xiao, W Bi, X Jia, H Wei, EH Adelson

Journal of vision 16 (3), 34-34, 2016

|

55</a > | 2016 |

| Can you see what you feel? Color and folding properties affect visual–tactile material discrimination of fabrics</a > EH Adelson, B Xiao, W Bi, X Jia, H Wei

American University, 2016

|

2016 | |

| Geometric Mechanics for Continuous Swimmers on Granular Material</a >

J Dai, H Faraji, P Schiebel, C Gong, M Travers, R Hatton, D Goldman,

… APS March Meeting Abstracts 2016, V40. 004, 2016</span >

|

2016 | |

| Visually indicated sounds</a >

A Owens, P Isola, J McDermott, A Torralba, EH Adelson, WT Freeman

Proceedings of the IEEE conference on computer vision and

pattern …, 2016 |

410</a > | 2016 |

| Learning visual groups from co-occurrences in space and time</a >

P Isola, D Zoran, D Krishnan, EH Adelson

arXiv preprint arXiv:1511.06811, 2015

|

129</a > | 2015 |

| Band-sifting decomposition for image-based material editing</a >

I Boyadzhiev, K Bala, S Paris, E Adelson

ACM Transactions on Graphics (TOG) 34 (5), 1-16, 2015</span >

|

61</a > | 2015 |

| Talk abstract: Computational lighting design and band-sifting operators</a > S Paris, I Boyadzhiev, K Bala, EH Adelson

2015 IEEE International Conference on Image Processing (ICIP),

36-37, 2015 |

2015 | |

| High-resolution surface measurement systems and methods</a >

EH Adelson, MK Johnson

US Patent 9,127,938, 2015

|

17</a > | 2015 |

| Modifying material appearance with bandsifting operators</a >

E Adelson, I Boyadzhiev, S Paris, K Bala

PERCEPTION 44, 85-85, 2015

|

2015 | |

| Tactile sensor using elastomeric imaging</a >

E Adelson

US Patent App. 14/045,594, 2015

|

1</a > | 2015 |

| Tactile sensor using elastomeric imaging</a >

E Adelson

US Patent App. 14/045,668, 2015

|

1</a > | 2015 |

| Measurement of shear and slip with a GelSight tactile sensor</a >

W Yuan, R Li, MA Srinivasan, EH Adelson

2015 IEEE International Conference on Robotics and Automation

(ICRA), 304-311, 2015 |

251</a > | 2015 |

| Technical Perspective Image Processing Goes Back to Basics</a >

E Adelson

COMMUNICATIONS OF THE ACM 58 (3), 80-80, 2015</span >

|

2015 | |

| Image processing goes back to basics: technical perspective</a >

E Adelson

Communications of the ACM 58 (3), 80-80, 2015</span >

|

2015 | |

| Discovering states and transformations in image collections</a >

P Isola, JJ Lim, EH Adelson

Proceedings of the IEEE conference on computer vision and

pattern …, 2015 |

215</a > | 2015 |

| On the appearance of translucent edges</a >

I Gkioulekas, B Walter, EH Adelson, K Bala, T Zickler

Proceedings of the ieee conference on computer vision and

pattern …, 2015 |

40</a > | 2015 |

| Sparklevision: Seeing the world through random specular microfacets</a > Z Zhang, P Isola, EH Adelson

Proceedings of the IEEE Conference on Computer Vision and

Pattern …, 2015 |

3</a > | 2015 |

| Sparkle vision: Seeing the world through random specular microfacets</a > Z Zhang, P Isola, EH Adelson

arXiv preprint arXiv:1412.7884, 2014

|

4</a > | 2014 |

| Tactile sensor using elastomeric imaging</a >

E Adelson

US Patent App. 14/045,647, 2014

|

1</a > | 2014 |

| Tactile sensor using elastomeric imaging</a >

E Adelson

US Patent App. 14/045,620, 2014

|

2</a > | 2014 |

| Localization and manipulation of small parts using gelsight tactile sensing</a > R Li, R Platt, W Yuan, A Ten Pas, N Roscup, MA Srinivasan, E Adelson

2014 IEEE/RSJ International Conference on Intelligent Robots and

Systems …, 2014 |

216</a > | 2014 |

| Puffball Part Segmentation: Psychophysical and Statistical Evaluation</a > N Twarog, E Adelson

Journal of Vision 14 (10), 893-893, 2014

|

2014 | |

| Seeing in Shadeworld</a >

E Adelson, P Isola

Journal of Vision 14 (10), 719-719, 2014

|

2014 | |

| Looking against the light: how perception of translucency depends on lighting direction and phase function</a > B Xiao, B Walter, I Gkioukelas, T Zickler, E Adelson, K Bala

Journal of Vision 14 (10), 1316-1316, 2014</span >

|

3</a > | 2014 |

| Accuracy and speed of material categorization in real-world images</a > L Sharan, R Rosenholtz, EH Adelson

Journal of vision 14 (9), 12-12, 2014

|

162</a > | 2014 |

| Tactile sensor using elastomeric imaging</a >

E Adelson

US Patent App. 13/971,456, 2014

|

2</a > | 2014 |

| Methods of and Systems for Three-Dimensional Digital Impression and Visualization of Objects Through an Elastomer</a > J Rohaly, MK Johnson, EH Adelson

US Patent App. 14/056,817, 2014

|

26</a > | 2014 |

| Looking against the light: How perception of translucency depends on lighting direction</a > B Xiao, B Walter, I Gkioulekas, T Zickler, E Adelson, K Bala

Journal of vision 14 (3), 17-17, 2014

|

89</a > | 2014 |

| Crisp boundary detection using pointwise mutual information</a >

P Isola, D Zoran, D Krishnan, EH Adelson

Computer Vision–ECCV 2014: 13th European Conference, Zurich,

Switzerland …, 2014 |

232</a > | 2014 |

| Optimal Gait Design for Systems With Drift on SO (3)</a >

M Travers, H Choset

Dynamic Systems and Control Conference 56130, V002T33A005, 2013</span >

|

2013 | |

| Understanding the role of phase function in translucent appearance</a > I Gkioulekas, B Xiao, S Zhao, EH Adelson, T Zickler, K Bala

ACM Transactions on graphics (TOG) 32 (5), 1-19, 2013</span >

|

91</a > | 2013 |

| Can you see what you feel? Tactile and visual matching of material properties of fabrics</a > B Xiao, X Jia, E Adelson

Journal of Vision 13 (9), 197-197, 2013

|

2</a > | 2013 |

| Recognizing materials using perceptually inspired features</a >

L Sharan, C Liu, R Rosenholtz, EH Adelson

International journal of computer vision 103, 348-371, 2013</span >

|

233</a > | 2013 |

| Lump detection with a gelsight sensor</a >

X Jia, R Li, MA Srinivasan, EH Adelson

2013 World Haptics Conference (WHC), 175-179, 2013</span >

|

28</a > | 2013 |

| Tactile sensor using elastomeric imaging</a >

EH Adelson

US Patent 8,411,140, 2013

|

62</a > | 2013 |

| Sensing and recognizing surface textures using a gelsight sensor</a >

R Li, EH Adelson

Proceedings of the IEEE Conference on Computer Vision and

Pattern …, 2013 |

138</a > | 2013 |

| Playing with puffball: simple scale-invariant inflation for use in vision and graphics</a > NR Twarog, MF Tappen, EH Adelson

Proceedings of the ACM symposium on applied perception, 47-54, 2012</span >

|

20</a > | 2012 |

| A unified approach to estimating shape from images</a >

P Isola, F Cole, B Freeman, F Durand, E Adelson

Journal of Vision 12 (9), 276-276, 2012

|

2012 | |

| Effects of shape and color on the perception of translucency</a >

B Xiao, I Gkioulekas, A Dunn, S Zhao, E Adelson, T Zickler, K Bala

Journal of Vision 12 (9), 948-948, 2012

|

3</a > | 2012 |

| Estimating material by estimating shape</a >

E Adelson, F Cole, P Isola, W Freeman, F Durand

Journal of Vision 12 (9), 946-946, 2012

|

2012 | |

| Shapecollage: Occlusion-aware, example-based shape interpretation</a > F Cole, P Isola, WT Freeman, F Durand, EH Adelson

Computer Vision–ECCV 2012: 12th European Conference on Computer

Vision …, 2012 |

20</a > | 2012 |

| Speed of Material vs. Object Recognition Depends upon Viewing Condition</a > B Xiao, L Sharan, R Rosenholtz, E Adelson

Journal of Vision 11 (15), 19-19, 2011

|

2</a > | 2011 |

| deForm: an interactive malleable surface for capturing 2.5 D arbitrary objects, tools and touch</a > S Follmer, M Johnson, E Adelson, H Ishii

Proceedings of the 24th annual ACM symposium on User interface

software and …, 2011 |

53</a > | 2011 |

| Interpreting line drawings of smooth shapes</a >

F Cole, F Durand, B Freeman, E Adelson

Journal of Vision 11 (11), 47-47, 2011

|

3</a > | 2011 |

| Material recognition is fast, but not superfast</a >

E Adelson, L Sharan, R Rosenholtz

Journal of Vision 11 (11), 402-402, 2011

|

2</a > | 2011 |

| Segmenting 2D Shapes using 3D Inflation</a >

NR Twarog, EH Adelson, MF Tappen

Journal of Vision 11 (11), 851-851, 2011

|

2011 | |

| Microgeometry capture using an elastomeric sensor</a >

MK Johnson, F Cole, A Raj, EH Adelson

ACM Transactions on Graphics (TOG) 30 (4), 1-8, 2011</span >

|

230</a > | 2011 |

| Visualizing and measuring detailed shape and texture with an elastomeric sensor</a > EH Adelson, MK Johnson

Computational Optical Sensing and Imaging, JTuD2, 2011</span >

|

2</a > | 2011 |

| Surface Metrology using an Elastomeric Sensor</a >

MK Johnson, EH Adelson

Applied Industrial Optics: Spectroscopy, Imaging and Metrology,

AITuB4, 2011 |

2011 | |

| Shape estimation in natural illumination</a >

MK Johnson, EH Adelson

CVPR 2011, 2553-2560, 2011

|

171</a > | 2011 |

| A computational model for material recognition</a >

L Sharan, C Liu, R Rosenholtz, E Adelson

Journal of Vision 10 (7), 987-987, 2010

|

2010 | |

| Exploring features in a bayesian framework for material recognition</a > C Liu, L Sharan, EH Adelson, R Rosenholtz

2010 ieee computer society conference on computer vision and

pattern …, 2010 |

319</a > | 2010 |

| Personal photo enhancement using example images.</a >

N Joshi, W Matusik, EH Adelson, DJ Kriegman

ACM Trans. Graph. 29 (2), 12:1-12:15, 2010</span >

|

122</a > | 2010 |

| Shape from Sheen</a >

EH Adelson, A Torralba, RW Fleming

|

1</a > | 2009 |

| Ground truth dataset and baseline evaluations for intrinsic image algorithms</a > R Grosse, MK Johnson, EH Adelson, WT Freeman

2009 IEEE 12th International Conference on Computer Vision,

2335-2342, 2009 |

515</a > | 2009 |

| An interactive” retrographic sensor” for touch, texture, and shape</a > A Raj, MK Johnson, EH Adelson

ACM SIGGRAPH 2009 Emerging Technologies, 1-1, 2009</span >

|

2009 | |

| The effect of color saturation and luminance contrast on color naturalness</a > L Nakano, T Takeuchi, I Motoyoshi, Y Li, E Adelson, S Nishida

Journal of vision 9 (8), 1040-1040, 2009

|

4</a > | 2009 |

| Material perception: What can you see in a brief glance?</a >

L Sharan, R Rosenholtz, E Adelson

Journal of Vision 9 (8), 784-784, 2009

|

360</a > | 2009 |

| Retrographic sensing for the measurement of surface texture and shape</a > MK Johnson, EH Adelson

2009 IEEE Conference on Computer Vision and Pattern Recognition,

1070-1077, 2009 |

331</a > | 2009 |

| The Perception of Surfaces and Materials.</a >

EH Adelson

Color Imaging Conference, 1, 2009

|

2009 | |

| Shape from sheen</a >

RW Fleming, A Torralba, EH Adelson

Three dimensional shape perception. Q. Zaidi,(Ed.) Springer, 2009</span >

|

18</a > | 2009 |

| Personal photo enhancement using prior images</a >

N Joshi, W Matusik, EH Adelson, DJ Kriegman

ACM SIGGRAPH 2008 posters, 1-1, 2008

|

1</a > | 2008 |

| Human-assisted motion annotation</a >

C Liu, WT Freeman, EH Adelson, Y Weiss

2008 IEEE Conference on Computer Vision and Pattern Recognition,

1-8, 2008 |

261</a > | 2008 |

| ScribbleBoost: Adding Classification to Edge‐Aware Interpolation of Local Image and Video Adjustments</a > Y Li, E Adelson, A Agarwala

Computer Graphics Forum 27 (4), 1255-1264, 2008</span >

|

75</a > | 2008 |

| Human-assisted motion annotation for real-world videos</a >

C Liu, E Adelson, W Freeman

Journal of Vision 8 (6), 679-679, 2008

|

2008 | |

| Eye movements for shape and material perception</a >

L Sharan, R Rosenholtz, EH Adelson

Journal of Vision 8 (6), 219-219, 2008

|

9</a > | 2008 |

| Image statistics for 3D shape estimation</a >

R Fleming, Y Li, E Adelson

Journal of Vision 8 (6), 76-76, 2008

|

6</a > | 2008 |

| Do colored highlights look like highlights</a >

S Nishida, I Motoyoshi, L Nakano, Y Li, L Sharan, E Adelson

Journal of Vision 8 (6), 339, 2008

|

26</a > | 2008 |

| Image statistics for surface reflectance perception</a >

L Sharan, Y Li, I Motoyoshi, S Nishida, EH Adelson

JOSA A 25 (4), 846-865, 2008

|

147</a > | 2008 |

| Image mapping using local and global statistics</a >

Y Li, E Adelson

Human Vision and Electronic Imaging XIII 6806, 399-409, 2008</span >

|

9</a > | 2008 |

| Image statistics and surface perception</a >

EH Adelson

Human vision and electronic imaging XIII 6806, 13-21, 2008</span >

|

26</a > | 2008 |

| Image mapping using local and global statistics [6806-38]</a >

Y Li, E Adelson

PROCEEDINGS-SPIE THE INTERNATIONAL SOCIETY FOR OPTICAL ENGINEERING

6806, 6806, 2008 |

2008 | |

| Image statistics and surface perception (Keynote Paper)[6806-01]</a >

EH Adelson

PROCEEDINGS-SPIE THE INTERNATIONAL SOCIETY FOR OPTICAL ENGINEERING

6806, 6806, 2008 |

2008 | |

| Apparent ridges for line drawing</a >

T Judd, F Durand, E Adelson

ACM transactions on graphics (TOG) 26 (3), 19-es, 2007</span >

|

370</a > | 2007 |

| Learning gaussian conditional random fields for low-level vision</a >

MF Tappen, C Liu, EH Adelson, WT Freeman

2007 IEEE Conference on Computer Vision and Pattern Recognition,

1-8, 2007 |

210</a > | 2007 |

| Histogram skewness is useful and easily computed in neural hardware</a > L Sharan, E Adelson, I Motoyoshi, S Nishida

Journal of Vision 7 (9), 966-966, 2007

|

2007 | |

| Imposing both local and global image statistics leads to perceptually improved superresolution</a > Y Li, E Adelson

Journal of Vision 7 (9), 965-965, 2007

|

2007 | |

| Image statistics and the perception of surface qualities</a >

I Motoyoshi, S Nishida, L Sharan, EH Adelson

Nature 447 (7141), 206-209, 2007

|

731</a > | 2007 |

| Where do you draw the lines?</a >

E Adelson, T Judd, F Durand

PERCEPTION 36, 104-104, 2007

|

2007 | |

| Non-oriented filters are better than oriented filters for skewness detection</a > L Sharan, EH Adelson, I Motoyoshi, S Nishida

Perception 36 (6a), 206-209, 2007

|

4</a > | 2007 |

| Estimating intrinsic component images using non-linear regression</a > MF Tappen, EH Adelson, WT Freeman

2006 IEEE Computer Society Conference on Computer Vision and

Pattern …, 2006 |

134</a > | 2006 |

| Learning the statistics of illumination and reflectance</a >

EH Adelson, MF Tappen, WT Freeman, Y Li

Journal of Vision 6 (6), 102-102, 2006

|

2006 | |

| Image statistics for surface reflectance estimation</a >

L Sharan, Y Li, EH Adelson

Journal of Vision 6 (6), 101-101, 2006

|

2</a > | 2006 |

| Analysis of contour motions</a >

C Liu, W Freeman, E Adelson

Advances in Neural Information Processing Systems 19, 2006</span >

|

45</a > | 2006 |

| Image statistics as a determinant of reflectance perception</a >

I Motoyoshi, S Nishida, EH Adelson

Journal of Vision 5 (8), 569-569, 2005

|

5</a > | 2005 |

| Image statistics and reflectance estimation</a >

L Sharan, Y Li, EH Adelson

Journal of Vision 5 (8), 375-375, 2005

|

5</a > | 2005 |

| Perceptually based range compression for high dynamic range images</a > Y Li, L Sharan, EH Adelson

Journal of Vision 5 (8), 598-598, 2005

|

11</a > | 2005 |

| Luminance re-mapping for the control of apparent material</a >

I Motoyoshi, S Nishida, EH Adelson

Proceedings of the 2nd symposium on Applied perception in graphics

and …, 2005 |

18</a > | 2005 |

| Motion magnification</a >

C Liu, A Torralba, WT Freeman, F Durand, EH Adelson

ACM transactions on graphics (TOG) 24 (3), 519-526, 2005</span >

|

496</a > | 2005 |

| Compressing and companding high dynamic range images with subband architectures</a > Y Li, L Sharan, EH Adelson

ACM transactions on graphics (TOG) 24 (3), 836-844, 2005</span >

|

368</a > | 2005 |

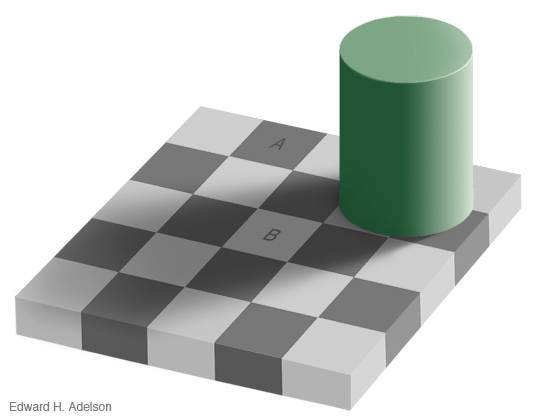

| Checkershadow illusion. 1995</a >

EH Adelson

URL http://web. mit.

edu/persci/people/adelson/checkershadow_illusion. html, 2005</span > |

21</a > | 2005 |

| Material Perception</a >

EH Adelson, L Sharan, Y Li

CSAIL Research Abstract, 2005

|

1</a > | 2005 |

| Adaptation to skewed image statistics alters the perception of glossiness and lightness</a > I Motoyoshi, S Nishida, EH Adelson

PERCEPTION 34, 24-24, 2005

|

6</a > | 2005 |

| Straightness as a cue for luminance edge interpretation</a >

AD Logvinenko, EH Adelson, DA Ross, D Somers

Perception & Psychophysics 67 (1), 120-128, 2005</span >

|

19</a > | 2005 |

| Motion perception and mid-level vision</a >

J McDermott, EH Adelson

The cognitive neurosciences III, 369-383, 2004</span >

|

8</a > | 2004 |

| The geometry of the occluding contour and its effect on motion interpretation</a > J McDermott, EH Adelson

Journal of Vision 4 (10), 9-9, 2004

|

38</a > | 2004 |

| Statistical characterization of real-world illumination</a >

RO Dror, AS Willsky, EH Adelson

Journal of Vision 4 (9), 11-11, 2004

|

170</a > | 2004 |

| Specular reflections and the perception of shape</a >

RW Fleming, A Torralba, EH Adelson

Journal of vision 4 (9), 10-10, 2004

|

369</a > | 2004 |

| Junctions and cost functions in motion interpretation</a >

J McDermott, EH Adelson

Journal of Vision 4 (7), 3-3, 2004

|

19</a > | 2004 |

| Image statistics for material perception</a >

EH Adelson, Y Li, L Sharan

Journal of Vision 4 (8), 123-123, 2004

|

7</a > | 2004 |

| 9.35 Sensation and Perception, Spring 2004</a >

EH Adelson

|

2004 | |

| Genericity and junctions in motion interpretation</a >

J McDermott, EH Adelson

Journal of Vision 3 (9), 480, 2003

|

2003 | |

| Textural statistics and surface perception.</a >

EH Adelson

Journal of Vision 3 (9), 48-48, 2003

|

3</a > | 2003 |

| How image statistics drive shape-from-texture and shape-from-specularity</a > RW Fleming, A Torralba, RO Dror, EH Adelson

Journal of Vision 3 (9), 73-73, 2003

|

7</a > | 2003 |

| Real-world illumination and the perception of surface reflectance properties</a > RW Fleming, RO Dror, EH Adelson

Journal of vision 3 (5), 3-3, 2003

|

524</a > | 2003 |

| Energy versus synchrony in perceptual grouping</a >

H Farid, EH Adelson

Journal of Vision 2 (7), 703-703, 2002

|

2002 | |

| Motion illusions as optimal percepts</a >

Y Weiss, EP Simoncelli, EH Adelson

Nature neuroscience 5 (6), 598-604, 2002

|

1303</a > | 2002 |

| Recovering shading and reflectance from a single image</a >

MF Tappen

Massachusetts Institute of Technology, 2002</span >

|

8</a > | 2002 |

| Recovering intrinsic images from a single image</a >

M Tappen, W Freeman, E Adelson

Advances in neural information processing systems 15, 2002</span >

|

617</a > | 2002 |

| Statistics of real-world illumination</a >

RO Dror, TK Leung, EH Adelson, AS Willsky

Proceedings of the 2001 IEEE Computer Society Conference on Computer

Vision …, 2001 |

72</a > | 2001 |

| Surface reflectance estimation under unknown natural illumination</a > RW Fleming, RO Dror, EH Adelson

Journal of Vision 1 (3), 43-43, 2001

|

4</a > | 2001 |

| Straightness, structure, and shadows</a >

E Adelson, D Somers

Journal of Vision 1 (3), 204-204, 2001

|

4</a > | 2001 |

| How do humans determine reflectance properties under unknown illumination?</a > RW Fleming, RO Dror, EH Adelson

|

38</a > | 2001 |

| Recognition of surface reflectance properties from a single image under unknown real-world illumination</a > RO Dror, EH Adelson, AS Willsky

|

77</a > | 2001 |

| Surface reflectance estimation and natural illumination statistics</a > RO Dror, EH Adelson, AS Willsky

|

28</a > | 2001 |

| Synchrony does not promote grouping in temporally structured displays</a > H Farid, EH Adelson

Nature Neuroscience 4 (9), 875-876, 2001

|

53</a > | 2001 |

| Beyond junctions: nonlocal form constraints on motion interpretation</a > J McDermott, Y Weiss, EH Adelson

Perception 30 (8), 905-923, 2001

|

91</a > | 2001 |

| Estimating surface reflectance properties from images under unknown illumination</a > RO Dror, EH Adelson, AS Willsky

Human Vision and Electronic Imaging VI 4299, 231-242, 2001</span >

|

48</a > | 2001 |

| On seeing stuff: the perception of materials by humans and machines</a > EH Adelson

Human vision and electronic imaging VI 4299, 1-12, 2001</span >

|

410</a > | 2001 |

| Genericity, not junctions: Insights from moving crosses</a >

J McDermott, EH Adelson, Y Weiss

PERCEPTION 30, 101-101, 2001

|

2001 | |

| Using image statistics in material perception</a >

EH Adelson, R Fleming, R Dror

PERCEPTION 30, 96-96, 2001

|

2001 | |

| Adventures with gelatinous ellipses—constraints on models of human motion analysis</a > Y Weiss, EH Adelson

Perception 29 (5), 543-566, 2000

|

50</a > | 2000 |

| Standard mechanisms can explain grouping in temporally synchronous displays</a > H Farid, EH Adelson

INVESTIGATIVE OPHTHALMOLOGY & VISUAL SCIENCE 41 (4),

S438-S438, 2000 |

2</a > | 2000 |

| The computation of occlusion for motion perception</a >

J McDermott, Y Weiss, EH Adelson

Investigative Ophthalmology & Visual Science 41 (4),

S544-S544, 2000 |

1</a > | 2000 |

| Temporal dynamics reveal multiple mechanisms of brightness perception.</a > DC Somers, KR Nichols, EH Adelson

INVESTIGATIVE OPHTHALMOLOGY & VISUAL SCIENCE 41 (4),

S956-S956, 2000 |

1</a > | 2000 |

| Buttons, bevels, and shadows.</a >

EH Adelson

INVESTIGATIVE OPHTHALMOLOGY & VISUAL SCIENCE 41 (4),

S741-S741, 2000 |

2000 | |

| Lightness perceptions and lightness illusions. The new cognitive sciences, M. Gazzaniga</a > EH Adelson

MIT Press, 2000

|

9</a > | 2000 |

| Lightness perception and lightness illusions. The new cognitive neurosciences</a > EH Adelson

Cambridge, MA: MIT Press, 2000

|

36</a > | 2000 |

| The new cognitive neurosciences</a >

EH Adelson, M Gazzaniga

Lightness perception and lightness illusions, 339-351, 2000</span >

|

78</a > | 2000 |

| Shadows are fuzzy and straight; paint is sharp and crooked</a >

EH Adelson, D Somers

Perception 29, 46-46, 2000

|

5</a > | 2000 |

| Filtering reveals form in temporally structured displays</a >

EH Adelson, H Farid

Science 286 (5448), 2231-2231, 1999

|

32</a > | 1999 |

| Separating reflections from images by use of independent component analysis</a > H Farid, EH Adelson

JOSA A 16 (9), 2136-2145, 1999

|

200</a > | 1999 |

| Separating reflections and lighting using independent components analysis</a > H Farid, EH Adelson

Proceedings. 1999 IEEE Computer Society Conference on Computer

Vision and …, 1999 |

196</a > | 1999 |

| Amodal completion and depth segregation in motion integration</a >

J McDermott, Y Weiss, EH Adelson

INVESTIGATIVE OPHTHALMOLOGY & VISUAL SCIENCE 40 (4),

S390-S390, 1999 |

1999 | |

| Analyzing junctions with atmospheric transfer functions</a >

EH Adelson

Investigative Ophthalmology & Visual Science 40 (4),

S987-S987, 1999 |

1</a > | 1999 |

| TECHNICAL COMMENTS-Filtering Reveals Form in Temporally Structured Displays</a > EH Adelson, H Farid

Science-International Edition-AAAS 286 (5448), 2361, 1999</span >

|

1999 | |

| Image Processing-Separating reflections from images by use of independent component analysis</a > H Farid, EH Adelson

Journal of the Optical Society of America-A-Optics Image Science and

Vision …, 1999 |

1999 | |

| Articulation and scene statistics</a >

EH Adelson

Perception 28, 17-17, 1999

|

1</a > | 1999 |

| The computation of occlusion for motion perception</a >

J McDermott, Y Weiss, EH Adelson

PERCEPTION 28, 80-80, 1999

|

1</a > | 1999 |

| Method and apparatus for enhancing images using helper signals</a >

B Girod, EH Adelson

US Patent 5,818,972, 1998

|

20</a > | 1998 |

| Subband texture synthesis for image coding</a >

SY Yoon, EH Adelson

Human Vision and Electronic Imaging III 3299, 489-497, 1998</span >

|

16</a > | 1998 |

| Slow and smooth: A Bayesian theory for the combination of local motion signals in human vision</a > Y Weiss, EH Adelson

|

162</a > | 1998 |

| Layered representation for image coding</a >

EH Adelson

US Patent 5,706,417, 1998

|

110</a > | 1998 |

| What makes a good T junction?</a >

J McDermott, Y Weiss, EH Adelson

Perception 27, 40-41, 1998

|

1</a > | 1998 |

| Atmospheric boundaries in lightness perception</a >

EH Adelson, DC Somers

Perception 26 (1_suppl), 60-60, 1997

|

2</a > | 1997 |

| Doggone Dalmatian!</a >

P Sinha, E Adelson

Perception 26 (5), 667-667, 1997

|

1</a > | 1997 |

| Surface perception and motion integration</a >

J McDermott, Y Weiss, EH Adelson

Investigative Ophthalmology & Visual Science 38 (4),

1108-1108, 1997 |

5</a > | 1997 |

| Junctions, transparency, and brightness</a >

DC Somers, EH Adelson

Investigative Ophthalmology & Visual Science 38 (4),

2126-2126, 1997 |

17</a > | 1997 |

| Vector averaging as Bayesian IOC</a >

Y Weiss, EH Adelson

Investigative Ophthalmology & Visual Science 38 (4),

4358-4358, 1997 |

3</a > | 1997 |

| Noise removal via Bayesian wavelet coring</a >

EP Simoncelli, EH Adelson

Proceedings of 3rd IEEE International Conference on Image Processing

1, 379-382, 1996 |

991</a > | 1996 |

| System for encoding image data into multiple layers representing regions of coherent motion and associated motion parameters</a > JYA Wang, EH Adelson

US Patent 5,557,684, 1996

|

268</a > | 1996 |

| The perception of shading and reflectance</a >

EH Adelson, AP Pentland

Perception as Bayesian inference 409, 423, 1996</span >

|

306</a > | 1996 |

| Layers and alpha maps as visual representations</a >

EH Adelson, JYA Wang

Perception 25 (1_suppl), 5-5, 1996

|

1</a > | 1996 |

| Interaction of Multiple Surface Cues in Motion Grouping</a >

Y Weiss, EH Adelson

Perception 25 (1_suppl), 178-178, 1996

|

1</a > | 1996 |

| A unified mixture framework for motion segmentation: Incorporating spatial coherence and estimating the number of models</a > Y Weiss, EH Adelson

Proceedings CVPR IEEE Computer Society Conference on Computer Vision

and …, 1996 |

378</a > | 1996 |

| Noise reduction system</a >

EH Adelson, WT Freeman

US Patent 5,526,446, 1996

|

163</a > | 1996 |

| Layers, warps, and alpha maps in mid-level vision</a >

EH Adelson, JYA Wang

INVESTIGATIVE OPHTHALMOLOGY & VISUAL SCIENCE 37 (3),

2122-2122, 1996 |

1</a > | 1996 |

| Integration and segmentation of non-rigid motion: a computational model</a > Y Weiss, EH Adelson

Investigative Ophthalmology & Visual Science 37 (3),

3395-3395, 1996 |

1</a > | 1996 |

| Motion estimation and segmentation using a recurrent mixture of experts architecture</a > Y Weiss, EH Adelson

Proceedings of 1995 IEEE Workshop on Neural Networks for Signal

Processing …, 1995 |

18</a > | 1995 |

| Layered representations for vision and video</a >

EH Adelson

Proceedings IEEE Workshop on Representation of Visual Scenes (In

Conjunction …, 1995 |

46</a > | 1995 |

| Integration and segmentation of nonrigid motion</a >

Y Weiss, EH Adelson

Investigative Ophthalmology & Visual Science 36 (4),

S228-S228, 1995 |

2</a > | 1995 |

| The analysis of moving visual patterns 1986. In Pattern Recognition Mechanisms, edited by C. Chagas, R. Gattass, and C. Gross. New York: Springer-Verlag</a > JA Movshon, EH Adelson, MS Gizzi, WT Newsome

Frontiers in Cognitive Neuroscience, 148, 1995</span >

|

1995 | |

| Checkershadow illusion</a >

EH Adelson

|

137</a > | 1995 |

| Adventures with gelatinous ellipses</a >

Y Weiss, EH Adelson

Perception 24, 31-31, 1995

|

2</a > | 1995 |

| Perceptually organized EM: A framework for motion segmentation that combines information about form and motion</a > Y Weiss, EH Adelson

Media Laboratory, Massachusetts Institute of Technology, 1995</span >

|

81</a > | 1995 |

| Transparent motion perception as detection of unbalanced motion signals. III. Modeling</a > N Qian, RA Andersen, EH Adelson

Journal of Neuroscience 14 (12), 7381-7392, 1994</span >

|

174</a > | 1994 |

| Transparent motion perception as detection of unbalanced motion signals. I. Psychophysics</a > N Qian, RA Andersen, EH Adelson

Journal of Neuroscience 14 (12), 7357-7366, 1994</span >

|

371</a > | 1994 |

| Mid-level vision: New directions in vision and video</a >

EH Adelson, JYA Wang, SA Niyogi

Proceedings of 1st International Conference on Image Processing 2,

21-25, 1994 |

11</a > | 1994 |

| Analyzing gait with spatiotemporal surfaces</a >

SA Niyogi, EH Adelson

Proceedings of 1994 IEEE Workshop on Motion of Non-rigid and

Articulated …, 1994 |

234</a > | 1994 |

| Representing moving images with layers</a >

JYA Wang, EH Adelson

IEEE transactions on image processing 3 (5), 625-638, 1994</span >

|

1703</a > | 1994 |

| Analyzing and recognizing walking figures in XYT</a >

Niyogi, Adelson

1994 Proceedings of IEEE Conference on Computer Vision and

Pattern …, 1994 |

948</a > | 1994 |

| Applying mid-level vision techniques for video data compression and manipulation</a > J Wang, EH Adelson

Digital Video Compression on Personal Computers: Algorithms and

Technologies …, 1994 |

44</a > | 1994 |

| Spatio-temporal segmentation of video data</a >

J Wang, EH Adelson

Image and Video Processing II 2182, 120-131, 1994</span >

|

160</a > | 1994 |

| Remote influences on brightness illusions</a >

EH Adelson

Investigative Ophthalmology & Visual Science 35 (4),

1490-1490, 1994 |

3</a > | 1994 |

| Applying mid-level vision techniques for video data compression and manipulation [2187-11]</a > JYA Wang, EH Adelson

PROCEEDINGS-SPIE THE INTERNATIONAL SOCIETY FOR OPTICAL ENGINEERING,

116-116, 1994 |

1994 | |

| Spatio-temporal segmentation of video data [2182-12]</a >

JYA Wang, EH Adelson

PROCEEDINGS-SPIE THE INTERNATIONAL SOCIETY FOR OPTICAL ENGINEERING,

120-120, 1994 |

1994 | |

| Perceptual organization and the judgment of brightness</a >

EH Adelson

Science 262 (5142), 2042-2044, 1993

|

748</a > | 1993 |

| Lightness, transparency, and midlevel vision</a >

EH Adelson

OSA Annual Meeting, WJJ. 1, 1993

|

1993 | |

| Layered representation for motion analysis</a >

JYA Wang, EH Adelson

Proceedings of IEEE Conference on Computer Vision and Pattern

Recognition …, 1993 |

441</a > | 1993 |

| Verifying the’consistency’of shading patterns and 3-D structures</a >

P Sinha, E Adelson

[1993] Proceedings IEEE Workshop on Qualitative Vision, 71-80, 1993</span >

|

6</a > | 1993 |

| Recovering reflectance and illumination in a world of painted polyhedra</a > P Sinha, E Adelson

1993 (4th) International Conference on Computer Vision, 156-163, 1993</span >

|

150</a > | 1993 |

| Layered representation for image sequence coding</a >

JYA Wang, EH Adelson

1993 IEEE International Conference on Acoustics, Speech, and

Signal …, 1993 |

29</a > | 1993 |

| Optimal brightness patterns for 2-D optical flow</a >

EH Adelson, JL Prince

Acoustics, Speech, and Signal Processing, IEEE International

Conference on 5 …, 1993 |

1993 | |

| A computational model for the integration of form and motion</a >

GT CHOU, EH ADELSON

INVESTIGATIVE OPHTHALMOLOGY & VISUAL SCIENCE 34 (4),

1029-1029, 1993 |

1</a > | 1993 |

| Recovering 3D shapes from 2D line-drawings</a >

P Sinha, E Adelson

Intelligent Robotics; Proceedings of the International Symposium

on …, 1993 |

4</a > | 1993 |

| Motion, orientation, and early vision</a >

EH Adelson

Spatial Vision 7 (1), 88-88, 1993

|

1</a > | 1993 |

| Single-eye range estimation by using displaced apertures with color filters</a > Y Amari, EH Adelson

Proceedings of the 1992 International Conference on Industrial

Electronics …, 1992 |

26</a > | 1992 |

| Vision with equiluminant colour contrast: 2. A large-scale technique and observations</a > P Cavanagh, EH Adelson, P Heard

Perception 21 (2), 219-226, 1992

|

34</a > | 1992 |

| EXPLORING THE ARGYLE ILLUSION</a >

EH ADELSON

INVESTIGATIVE OPHTHALMOLOGY & VISUAL SCIENCE 33 (4),

1259-1259, 1992 |

1992 | |

| THE RECOVERY OF 3-D SHAPES FROM 2-D WIREFRAME DRAWINGS</a >

P Sinha, EH Adelson

Investigative Ophthalmology & Visual Science 33 (4),

825-825, 1992 |

10</a > | 1992 |

| Probabilistic contour analysis in a hypercolumn representation</a >

WT Freeman, EH Adelson

INVESTIGATIVE OPHTHALMOLOGY & VISUAL SCIENCE 33 (4),

825-825, 1992 |

1</a > | 1992 |

| Shiftable multiscale transforms</a >

EP Simoncelli, WT Freeman, EH Adelson, DJ Heeger

IEEE transactions on Information Theory 38 (2), 587-607, 1992</span >

|

2070</a > | 1992 |

| Directionally selective complex cells and the computation of motion energy in cat visual cortex</a > RC Emerson, JR Bergen, EH Adelson

Vision research 32 (2), 203-218, 1992

|

374</a > | 1992 |

| Single lens stereo with a plenoptic camera</a >

EH Adelson, JYA Wang

IEEE transactions on pattern analysis and machine intelligence 14

(2), 99-106, 1992 |

1509</a > | 1992 |

| A Model of Transparent Motion Perception Using Layers</a >

T DARREL

Investigative Opthalmology and Visual Science Supplement (ARVO 1992)

33, 1142, 1992 |

1</a > | 1992 |

| A computational model for perception of two-dimensional pattern velocities</a > EP Simoncelli, DJ Heeger, EH Adelson

Investigative Opthalmology and Visual Science Supplement 33,

1142, 1992 |

11</a > | 1992 |

| Optical ranging apparatus</a >

EH Adelson

US Patent 5,076,687, 1991

|

230</a > | 1991 |

| Layered representations for image coding</a >

EH Adelson

Vision and Modeling Group, Media Laboratory, Massachusetts Institute

of …, 1991 |

71</a > | 1991 |

| Steerable pyramids for image decomposition and enhancement</a >

WT Freeman, EH Adelson

OSA Annual Meeting, WV5, 1991

|

1991 | |

| The plenoptic function and the elements of early vision</a >

EH Adelson, JR Bergen

Computational models of visual processing 1 (2), 3-20, 1991</span >

|

2607</a > | 1991 |

| H., and William T</a >

E Adelson

Freeman,” The Design and Use of Steerable Filters,” IEEE T-PAMI 13,

891-906, 1991 |

2</a > | 1991 |

| The design and use of steerable filters</a >

WT Freeman, EH Adelson

IEEE Transactions on Pattern analysis and machine intelligence 13

(9), 891-906, 1991 |

4616</a > | 1991 |

| Mechanisms for motion perception</a >

E Adelson

Optics and Photonics News 2 (8), 24-30, 1991</span >

|

36</a > | 1991 |

| Motion without movement</a >

WT Freeman, EH Adelson, DJ Heeger

ACM Siggraph Computer Graphics 25 (4), 27-30, 1991</span >

|

201</a > | 1991 |

| Probability distributions of optical flow.</a >

EP Simoncelli, EH Adelson, DJ Heeger

CVPR 91, 310-315, 1991

|

593</a > | 1991 |

| Probability distributions of optical flow</a >

EH Adelson, EP Simoncelli, DJ Heeger

IEEE Conf. Comput. Vision and Pattern Recognition, 310315, 1991</span >

|

9</a > | 1991 |

| Pyramids and multiscale representations</a >

EH Adelson, EP Simoncelli, WT Freeman

Representations and Vision, Gorea A.,(Ed.). Cambridge University

Press …, 1991 |

45</a > | 1991 |

| Receiver-compatible enhanced definition television system</a >

WF Schreiber, AB Lippman, EH Adelson, AN Netravali

US Patent 5,010,405, 1991

|

223</a > | 1991 |

| Extended definition television systems</a >

AB Lippman, EH Adelson, WJ Butera

US Patent 5,003,377, 1991

|

5</a > | 1991 |

| Junction detection and classification</a >

WT Freeman, EH Adelson

INVESTIGATIVE OPHTHALMOLOGY & VISUAL SCIENCE 32 (4),

1279-1279, 1991 |

8</a > | 1991 |

| RELATIONSHIP BETWEEN GRADIENT, SPATIOTEMPORAL ENERGY, AND REGRESSION-MODELS FOR MOTION PERCEPTION</a > EP Simoncelli, EH Adelson

Investigative Ophthalmology & Visual Science 32 (4),

893-893, 1991 |

13</a > | 1991 |

| A DIFFERENT AFTEREFFECT OF MOTION-ALTERING PERCEIVED DIRECTION OF GRATINGS AND PLAIDS</a > ACO LEW, EY Song, SR FRIEDMANHILL, EH Adelson, JM Wolfe

INVESTIGATIVE OPHTHALMOLOGY & VISUAL SCIENCE 32 (4),

827-827, 1991 |

2</a > | 1991 |

| ELEMENTARY MEASUREMENTS IN VISION</a >

EH ADELSON, JR BERGEN

INVESTIGATIVE OPHTHALMOLOGY & VISUAL SCIENCE 32 (4),

1024-1024, 1991 |

1991 | |

| Computing optical flow distributions using spatio-temporal filters</a > EP Simoncelli, EH Adelson

Vision and Modeling Group, Media Laboratory, Massachusetts Institute

of …, 1991 |

36</a > | 1991 |

| The plenoptic function and the elements of early vision. Computational Models of Visual Processing</a > EH Adelson, JR Bergen

Int. J. Comput. Vis 20, 1991

|

71</a > | 1991 |

| William T. Freeman Edward H. Adelson y</a >

EH Adelson

|

1991 | |

| Computational models of visual processing</a >

EH Adelson, JR Bergen

MIT Press, Cambridge, Mass, 1991

|

167</a > | 1991 |

| ” The Plenoptic Function and the Elements of Early Vision”, Computational Models of Visual</a > EH ADELSON

Processing, Chap. 1, Edited by M. Landy and JA Movshon, 1991</span >

|

2</a > | 1991 |

| Pyramids for early vision</a >

EH Adelson, E Simoncelli, WT Freeman

Representations of vision, 3-16, 1991

|

3</a > | 1991 |

| A stereoscopic camera employing a single main lens</a >

EH Adelson, JYA Wang

Proceedings. 1991 IEEE Computer Society Conference on Computer

Vision and …, 1991 |

5</a > | 1991 |

| Elements of early vision</a >

EH Adelson

OSA Annual Meeting, ME1, 1990

|

1990 | |

| Extensions of steerable filters</a >

WT Freeman, EH Adelson

OSA Annual Meeting, MT2, 1990

|

1990 | |

| Single-lens stereoscopic camera</a >

JYA Wang, EH Adelson

OSA Annual Meeting, FKK4, 1990

|

1990 | |

| Ordinal aspects of transparent junctions</a >

P Anandan, EH Adelson

OSA Annual Meeting, FDD3, 1990

|

1</a > | 1990 |

| Optical flow distributions: gradient, energy, and regression methods</a > EP Simoncelli, EH Adelson

OSA Annual Meeting, FDD4, 1990

|

2</a > | 1990 |

| System for ascertaining direction of blur in a range-from-defocus camera</a > B Girod, EH Adelson

US Patent 4,965,442, 1990

|

60</a > | 1990 |

| Ordinal characteristics of transparency</a >

EH Adelson, P Anandan

Vision and Modeling Group, Media Laboratory, Massachusetts Institute

of …, 1990 |

168</a > | 1990 |

| Digital signal encoding and decoding apparatus</a >

EH Adelson

US Patent 4,939,515, 1990

|

428</a > | 1990 |

| Non-separable extensions of quadrature mirror filters to multiple dimensions</a > EP Simoncelli, EH Adelson

Proceedings of the IEEE 78 (4), 652-664, 1990</span >

|

196</a > | 1990 |

| Subband image coding with hexagonal quadrature mirror filters</a >

EP Simoncelli, EH Adelson

Picture Coding Symposium, 1990

|

9</a > | 1990 |

| Subband image coding with three-tap pyramids</a >

EH Adelson, EP Simoncelli

Picture Coding Symposium, 1-3, 1990

|

48</a > | 1990 |

| Lightness judgements and perceptual organization</a >

EH Adelson

Investigative Opthalmology and Visual Science Supplement, 1304, 1990</span >

|

6</a > | 1990 |

| Subband transforms</a >

EP Simoncelli, EH Adelson

Subband image coding, 143-192, 1990

|

172</a > | 1990 |

| Pyramids for vision and image processing</a >

EH Adelson

PERCEPTION 19 (4), 325-325, 1990

|

1990 | |

| Steerable filters for early vision, image analysis, and wavelet decomposition</a > WT Freeman, EH Adelson

Proceedings Third International Conference on Computer Vision,

406,407,408 …, 1990 |

98</a > | 1990 |

| The perceptual buildup of three-dimensional structure from motion</a > EC Hildreth, NM Grzywacz, EH Adelson, VK Inada

Perception & Psychophysics 48, 19-36, 1990</span >

|

84</a > | 1990 |

| Bildbehandlingsssystem.</a >

CR Carlson, EH Adelson, CH Anderson

|

1989 | |

| A compatible high-definition television system using the noise-margin method of hiding enhancement information</a > WF Schreiber, EH Adelson, AB Lippman, G Rongshu, P Monta, A Popat,

… SMPTE journal 98 (12), 873-879, 1989

|

17</a > | 1989 |

| Nonseparable QMF pyramids</a >

EP Simoncelli, EH Adelson

Visual Communications and Image Processing IV 1199, 1242-1246, 1989</span >

|

9</a > | 1989 |

| Nonlinear model of cat striate physiology</a >

DJ Heeger, EH Adelson

OSA Annual Meeting, TUT2, 1989

|

6</a > | 1989 |

| Steerable filters for image analysis</a >

WT Freeman, EH Adelson, EP Simoncelli

OSA Annual Meeting, MJJ4, 1989

|

20</a > | 1989 |

| Analyzing shading and reflectance</a >

EH Adelson, AP Pentland

OSA Annual Meeting, MJJ2, 1989

|

1</a > | 1989 |

| Hexagonal qmf pyramids</a >

EH Adelson, EP Simoncelli

Applied Vision, WB2, 1989

|

2</a > | 1989 |

| Steerable filters</a >

WT Freeman, EH Adelson

Image Understanding and Machine Vision, WB2, 1989</span >

|

25</a > | 1989 |

| Truncated subband coding of images</a >

EH Adelson, EPM Simoncelli

US Patent 4,817,182, 1989

|

97</a > | 1989 |

| WHITAKER COLLEGE</a >

CM No, EC Hildreth, NM Grzywacz, EH Adelson, VK Inada

|

1989 | |

| Early vision and texture perception</a >

JR Bergen, EH Adelson

Nature 333 (6171), 363-364, 1988

|

476</a > | 1988 |

| Single-channel backward-compatible EDTV systems</a >

AB Lippmanny, AN Netravali, EH Adelson, WR Neuman, WF Schreiber

Television Technology in Transition: 22nd Annual SMPTE Television

Conference …, 1988 |

10</a > | 1988 |

| Channel-compatible 6-MHz HDTV distribution systems</a >

WF Schreiber, AB Lippman, AN Netravali, EH Adelson, DH Staelin

Television Technology in Transition: 22nd Annual SMPTE Television

Conference …, 1988 |

29</a > | 1988 |

| The temporal integration of 3-D structure from motion: a computational and psychophysical study</a > NM Grzywacz, EC Hildreth, VK Inada, EH Adelson

Organization of neural networks, 239-259, 1988</span >

|

11</a > | 1988 |

| Efficient image coding with QMF pyramids</a >

E Simoncelli, EH Adelson, R Hingorani

OSA Annual Meeting, WL7, 1987

|

1987 | |

| QMF pyramids: a new class of orthogonal pyramid transform</a >

EH Adelson, E Simoncelli

OSA Annual Meeting, WL6, 1987

|

1987 | |

| Visual mechanisms and visual illusions</a >

EH Adelson

OSA Annual Meeting, WB1, 1987

|

1987 | |

| Hierarchical, computationally efficient motion estimation algorithm</a > JR Bergen, EH Adelson

OSA Annual Meeting, MY5, 1987

|

81</a > | 1987 |

| Orthogonal Pyramid Transforms For Image Coding.</a >

EH Adelson, E Simoncelli, R Hingorani

Visual Communications and image processing II 845, 50-58, 1987</span >

|

540</a > | 1987 |

| Method for compensating for void-defects in images</a >

PJ Burt, EH Adelson

US Patent 4,698,843, 1987

|

26</a > | 1987 |

| Depth-of-focus imaging process method</a >

EH Adelson

US Patent 4,661,986, 1987

|

219</a > | 1987 |

| The Laplacian pyramid as a compact image code</a >

PJ Burt, EH Adelson

Readings in computer vision, 671-679, 1987</span >

|

9937</a > | 1987 |

| Visual texture segmentation based on energy measures</a >

JR Bergen, EH Adelson

OSA Annual Meeting, THN7, 1986

|

51</a > | 1986 |

| Experimental brain research supplementum ii: pattern recognition mechanisms</a > JA Movshon, EH Adelson, MS Gizzi, WT Newsome

|

8</a > | 1986 |

| The perceptual buildup of three-dimensional structure from motion</a > VK Inada, EC Hildreth, NM Grzywacz, EH Adelson

Invest. Ophthal. Visual Sci. Suppl 27, 142, 1986</span >

|

7</a > | 1986 |

| The extraction of spatio-temporal energy in human and machine vision</a > EH Adelson

IEEE Workshop on Motion: Representation and analysis, 151-155, 1986</span >

|

217</a > | 1986 |

| Merging images through pattern decomposition</a >

PJ Burt, EH Adelson

Applications of digital image processing VIII 575, 173-181, 1985</span >

|

243</a > | 1985 |

| Manipulating image information with pyramids</a >

EH Adelson, PJ Burt

OSA Annual Meeting, THX2, 1985

|

1985 | |

| Mechanisms for the detection of flicker and motion</a >

JR Bergen, EH Adelson

OSA Annual Meeting, FQ5, 1985

|

1</a > | 1985 |

| Pyramid-based computer graphics</a >

JM Ogden, EH Adelson, JR Bergen, PJ Burt

RCA engineer 30 (5), 4-15, 1985

|

222</a > | 1985 |

| System for coring an image-representing signal</a >

CR Carlson, EH Adelson, CH Anderson

US Patent 4,523,230, 1985

|

89</a > | 1985 |

| Improved system for coring an image-representing signal</a >

CR Carlson, EH Adelson, CH Anderson

|

1</a > | 1985 |

| Spatiotemporal energy models for the perception of motion</a >

EH Adelson, JR Bergen

Josa a 2 (2), 284-299, 1985

|

4599</a > | 1985 |

| The analysis of visual moving patterns</a >

JA Movshon, EH Adelson, MS Gizzi, WT Newsome

Pattern recognition mechanisms, 117-151, 1985</span >

|

60</a > | 1985 |

| Pattern recognition mechanisms</a >

JA Movshon, EH Adelson, MS Gizzi, WT Newsome, C Chagas, R Gattas,

… |

158</a > | 1985 |

| The analysis of moving visual patterns</a >

JA Movshon

Experimental Brain Research, 117-151, 1985</span >

|

1175</a > | 1985 |

| Pyramid methods in image processing</a >

EH Adelson, CH Anderson, JR Bergen, PJ Burt, JM Ogden

RCA engineer 29 (6), 33-41, 1984

|

1783</a > | 1984 |

| 75 Inventor: Jerome M. Shapiro, Philadelphia, Pa.</a >

EH Adelson, E Simoncelli, RH SPIE

Research & Development 28 (2), 1984

|

1984 | |

| Multi-resolution splining using A pyramid image representation</a >

EH Adelson, PJ Burt

Applications of Digital Image Processing VI 432, 204-210, 1984</span >

|

3</a > | 1984 |

| Motion channels based on spatiotemporal energy</a >

E Adelson

Investigative Ophthalmology and Visual Science 25, 1984</span >

|

16</a > | 1984 |

| Chromokinetic effect: a surprising interaction of color, luminance, and motion (A)</a > JR Bergen, EH Adelson

Journal of the Optical Society of America A 1, 1284, 1984</span >

|

1984 | |

| The perception of coherent motion in two-dimensional patterns</a >

EH Adelson, JA Movshon

ACM SIGGRAPH Computer Graphics 18 (1), 23-23, 1984</span >

|

35</a > | 1984 |

| Binocular disparity and the computation of two-dimensional motion (A)</a > EH Adelson, JA Movshon

Journal of the Optical Society of America A 1, 1266, 1984</span >

|

59</a > | 1984 |

| A multiresolution spline with application to image mosaics</a >

PJ Burt, EH Adelson

ACM Transactions on Graphics (TOG) 2 (4), 217-236, 1983</span >

|

1725</a > | 1983 |

| What is iconic storage good for?</a >

EH Adelson

Behavioral and Brain Sciences 6 (1), 11-12, 1983</span >

|

12</a > | 1983 |

| In C. Chagas, R. Gattas, & DG Gross</a >

A Movshon, EH Adelson, MS Gizzi, WT Newsome

Pattern recognition mechanisms, 1983

|

3</a > | 1983 |

| Spatiotemporal energy models for the perception of motion (A)</a >

EH Adelson, JR Bergen

Journal of the Optical Society of America (1917-1983) 73, 1861, 1983</span >

|

11</a > | 1983 |

| Phenomenal coherence of moving visual patterns</a >

EH Adelson, JA Movshon

Nature 300 (5892), 523-525, 1982

|

1447</a > | 1982 |

| Image encoding with the Laplacian pyramid (A)</a >

EH Adelson, PJ Burt

Journal of the Optical Society of America (1917-1983) 72, 1803, 1982</span >

|

3</a > | 1982 |

| Phenomenal coherence of Barbas, H.(1988)</a >

EH Adelson, JA Movshon

Anatomic organization of basoventral and medio, 1982</span >

|

5</a > | 1982 |

| Some new illusions and some old ones analyzed in terms of their Fourier components</a > EH Adelson

Investigative Ophthalmology & Visual Science 22, 144, 1982</span >

|

43</a > | 1982 |

| The delayed rod afterimage</a >

EH Adelson

Vision Research 22 (10), 1313-1328, 1982

|

27</a > | 1982 |

| Saturation and adaptation in the rod system</a >

EH Adelson

Vision research 22 (10), 1299-1312, 1982

|

213</a > | 1982 |

| Nonlinear scale for plotting color-matching functions</a >

EH Adelson

JOSA 71 (2), 201-203, 1981

|

1981 | |

| Image data compression with the Laplacian pyramid</a >

PJ Burt, EH Adelson

Proc. IEEE Conf. Pattern Recognition and Image Processing, 1981</span >

|

3</a > | 1981 |

| Phenomenal coherence of moving gratings (A)</a >

EH Adelson, JA Movshon

Journal of the Optical Society of America (1917-1983) 70, 1605, 1980</span >

|

7</a > | 1980 |

| Bleaches as equivalent backgrounds and equivalent filters (A)</a >

EH Adelson

Journal of the Optical Society of America (1917-1983) 70, 1616, 1980</span >

|

3</a > | 1980 |

| Image data compression with the Laplacian pyramid</a >

EH Adelson, PJ Burt

University of Maryland, 1980

|

96</a > | 1980 |

| The psychophysics of iconic storage.</a >

EH Adelson, J Jonides

Journal of Experimental Psychology: Human Perception and Performance

6 (3), 486, 1980 |

96</a > | 1980 |

| Directional movement selectivity in cortical complex cells</a >

JA Movshon, ET Davis, EH Adelson

Soc. Neurosci. Abs 6, 670, 1980

|

18</a > | 1980 |

| Visual persistence without the rods.</a >

EH Adelson

Perception & Psychophysics, 1979

|

42</a > | 1979 |

| The response of the rod system to bright flashes of light</a >

EH Adelson

University of Michigan, 1979

|

1</a > | 1979 |

| Mathematics of afterimage discrimination (A)</a >

EH Adelson

Journal of the Optical Society of America (1917-1983) 69, 1432, 1979</span >

|

1</a > | 1979 |

| Iconic storage: The role of rods</a >

EH Adelson

Science 201 (4355), 544-546, 1978

|

46</a > | 1978 |

| Decay of rod signals following bright flashes (A)</a >

EH Adelson

Journal of the Optical Society of America (1917-1983) 67, 1427, 1977</span >

|

5</a > | 1977 |

| KEY TO INDEX USE</a >

JB Abshire, N Acquista, J See Reader, EH Adelson, J Agostinelli, …

Transformation 50, 487, 1739

|

1739 | |

| 24 Lightness Perception and Lightness Illusions</a >

EH Adelson

|

738</a > | 24 |

| See, Hear, and Feel: Smart Sensory Fusion for Robotic Manipulation (Supplementary Materials)</a > H Li, Y Zhang, J Zhu, S Wang, MA Lee, H Xu, E Adelson, L Fei-Fei, R

Gao, … |

||

| Swingbot: Learning physical features from in-hand tactile exploration for dynamic swing-up manipulation. In 2020 IEEE</a > C Wang, S Wang, B Romero, F Veiga, E Adelson

RSJ International Conference on Intelligent Robots and Systems

(IROS), 5633-5640, 0 |

5</a > | |

| ” Department of Brain and Cognitive Sciences* Department of Electrical Engineering and Computer Science</a > EH Adelson, JYA Wang, SA Niyogº

|

||

| Newsome. WT (1985). The analysis of moving visual patterns</a >

JA Movshon, EH Adelson, MS Gizzi

Stud)’group on pattern recognition mechanisms, 117-1511, 0</span >

|

7</a > | |

| The Perceptual Buildup of Three-Dimensional Structure</a >

EC Hildreth, NM Grzywacz, EH Adelson, VK Inada

|

||

| On the Appearance of Translucent Edges: Supplementary Material</a >

I Gkioulekas, S Harvard, B Walter, EH Adelson, K Bala, T Zickler

|

||

| The Recognition of Material Properties for Vision and Video Communication MIT2000-02</a > EH Adelson

|

||

| Can you see what you feel? Color and folding properties affect material discrimination of fabrics</a > B Xiao, W Bi, X Jia, H Wei, EH Adelson

|

||

| Research Abstracts-2007</a >

C Liu, WT Freeman, EH Adelson

|

||

| Crisp Boundary Detection Using Pointwise Mutual Information–Supplementary Material</a > P Isola, D Zoran, D Krishnan, EH Adelson

|

||

| ARAI, Y. 531 ARAKI, M. 639 ARASE, M. 521 ARAVENA, JL 189 ARCE, GR 187</a > RS ACHARYA, EH ADELSON, M ADJOUADI, G ADORNI, JK AGGARWAL, …

|

||

| 9.601 J/24.949 J Language Acquisition I, Spring 2002</a >

SL Chorover, S Corkin, PH Schiller, GE Schneider, HS Seung, …

|

||

| The MIT Media Laboratory The MIT Media Laboratory Dept. Elec. Eng. and Comp. Sci. Dept. Brain and Cognitive Sciences Massachusetts Institute of Technology Massachusetts …</a > JYA Wang, EH Adelson

|

||

| Aanjaneya, Mridul</a >

M Abe, KT Abou-Moustafa, GD Abowd, WC Abraham, R Abugharbieh, …

|

||

| MIT Media Lab Vision and Modeling Group Technical Report No. 223 Analyzing and Recognizing Walking Figures in XYT</a > SA Niyogi, EH Adelson

|

||

| AI Memo No. 1141 August, 1989 CBIP Memo No. 20</a >

EC Hildreth, NM Grzywacz, EH Adelson, VK Inada

|

||

| Research Abstracts-2006</a >

L Sharan, Y Li, EH Adelson

|

||

| Acoustic Material Recognition</a >

S Cavaco, EH Adelson

|

||

| Estimating Surface Reflectance from Images</a >

R Dror, EH Adelson

|

||

| Recovering Intrinsic Images with Gaussian Conditional Random Fields</a > MF Tappen, WT Freeman, EH Adelson

|

||

| Lightness Perception and Lightness Illusions (Banquet Talk)</a >

EH Adelson

|

||

| Recovering Intrinsic Images</a >

MF Tappen, WT Freeman, EH Adelson

|

Rosenholtz Publications

| ||

|

StatTexNet: Evaluating the Importance of Statistical Parameters for

Pyramid-Based Texture and Peripheral Vision Models

C Koevesdi, V DuTell, A Harrington, M Hamilton, WT Freeman, …

NeuRIPS 2023 Workshop on Gaze Meets ML, 2023

|

2023 | |

| ||

|

Evaluating Peripheral Vision as an Input Transformation to Understand

Object Detection Model Behavior

A Harrington, V DuTell, M Hamilton, A Tewari, S Stent, WT Freeman, …

NeuRIPS 2023 Workshop on Gaze Meets ML, 2023

|

2023 | |

| ||

|

Does your old clutter measure spark joy?

R Rosenholtz

Journal of Vision 23 (9), 5813-5813, 2023

|

2023 | |

| ||

|

Evaluating Pyramid-Based Image Statistics Using Contrastive Learning

V DuTell, W Freeman, R Rosenholtz

Journal of Vision 23 (9), 5744-5744, 2023

|

2023 | |

| ||

|

Peripheral Vision: A Critical Component of Many Visual Tasks

R Rosenholtz

Oxford Research Encyclopedia of Psychology, 2023

|

2023 | |

| ||

|

Efficient dataflow modeling of peripheral encoding in the human visual

system

R Brown, V Dutell, B Walter, R Rosenholtz, P Shirley, M McGuire, …

ACM Transactions on Applied Perceptions 20 (1), 1-22, 2023

|

6 | 2023 |

| ||

|

Efficient Dataflow Modeling of Peripheral Encoding

R Brown, V Dutell, B Walter, R Rosenholtz, P Shirley, M McGuire, …

Journal of Vision 22 (14), 4378-4378, 2022

|

2022 | |

| ||

|

Exploring the perceptual straightness of adversarially robust and

biologically-inspired visual representations

A Harrington, V DuTell, A Tewari, M Hamilton, S Stent, R Rosenholtz, …

SVRHM 2022 Workshop@ NeurIPS, 2022

|

2022 | |

| ||

|

Peripheral vision in real-world tasks: A systematic review

C Vater, B Wolfe, R Rosenholtz

Psychonomic bulletin & review 29 (5), 1531-1557, 2022

|

29 | 2022 |

| ||

|

Exploring perceptual straightness in learned visual representations

A Harrington, V DuTell, A Tewari, M Hamilton, S Stent, R Rosenholtz, …

The Eleventh International Conference on Learning Representations, 2022

|

1 | 2022 |

| ||

|

Peripheral Vision, Models of

R Rosenholtz, B Wolfe

Encyclopedia of Computational Neuroscience, 2722-2725, 2022

|

2022 | |

| ||

|

Toward a theory of visual information acquisition in driving

B Wolfe, BD Sawyer, R Rosenholtz

Human factors 64 (4), 694-713, 2022

|

45 | 2022 |

| ||

|

Effects of temporal and spatiotemporal cues on detection of dynamic

road hazards

B Wolfe, A Kosovicheva, S Stent, R Rosenholtz

Cognitive research: principles and implications 6, 1-15, 2021

|

7 | 2021 |

| ||

|

” Challenges to pooling models of crowding: Implications for visual

mechanisms”: Correction.

R Rosenholtz, D Yu, S Keshvari

Assn for Research in Vision & Ophthalmology (ARVO), 2021

|

2021 | |

| ||

|

The role of crowding in mental maze solving

Y Semizer, D Yu, R Rosenholtz

Journal of Vision 21 (9), 2896-2896, 2021

|

2021 | |

| ||

|

Attentional Cueing in the World: Temporal and Spatiotemporal Cues for

Road Hazards

B Wolfe, A Kosovicheva, S Stent, R Rosenholtz

Journal of Vision 21 (9), 2218-2218, 2021

|

1 | 2021 |

| ||

|

Corrections to: Challenges to pooling models of crowding

R Rosenholtz, D Yu, S Keshvari

|

2021 | |

| ||

|

Superior parietal lobule: a role in relative localization of multiple

different elements

A Vialatte, Y Yeshurun, AZ Khan, R Rosenholtz, L Pisella

Cerebral Cortex 31 (1), 658-671, 2021

|

8 | 2021 |

| ||

|

The Role of Peripheral Vision and Attention in Change Blindness

ME Smith, D El-Shaarawi, Y Ma, E Wilson, L Salee, R Rosenholtz, …

Journal of Vision 20 (11), 1538-1538, 2020

|

1 | 2020 |

| ||

|

Understanding dynamic scenes: How driving can teach us about scene

perception

B Wolfe, R Rosenholtz

Journal of Vision 20 (11), 145-145, 2020

|

1 | 2020 |

| ||

|

Texture Tiling Model

R Rosenholtz

|

2020 | |

| ||

|

Demystifying visual awareness: Peripheral encoding plus limited

decision complexity resolve the paradox of rich visual experience and

curious perceptual failures

R Rosenholtz

Attention, Perception, & Psychophysics 82 (3), 901-925, 2020

|

23 | 2020 |

| ||

|

Rapid holistic perception and evasion of road hazards.

B Wolfe, B Seppelt, B Mehler, B Reimer, R Rosenholtz

Journal of experimental psychology: general 149 (3), 490, 2020

|

53 | 2020 |

| ||

|

Detection of brake lights while distracted: Separating peripheral

vision from cognitive load

B Wolfe, BD Sawyer, A Kosovicheva, B Reimer, R Rosenholtz

Attention, Perception, & Psychophysics 81, 2798-2813, 2019

|

24 | 2019 |

| ||

|

The occurrence of illusory conjunctions correlates with the spatial

noise in peripheral vision

YA Markov, LG Dulyan, R Rosenholtz, IS Utochkin

Journal of Vision 19 (10), 66a-66a, 2019

|

2019 | |

| ||

|

Perceptual factors in mental maze solving

D Yu, Q Wan, B Balas, R Rosenholtz

Journal of Vision 19 (10), 68b-68b, 2019

|

2019 | |

| ||

|

Why Uber Drivers Scare You: Detecting Road Hazards With Peripheral

Vision

BA Wolfe, R Rosenholtz

Journal of Vision 19 (10), 162c-162c, 2019

|

1 | 2019 |

| ||

|

Challenges to pooling models of crowding: Implications for visual

mechanisms

R Rosenholtz, D Yu, S Keshvari

Journal of vision 19 (7), 15-15, 2019

|

51 | 2019 |

| ||

|

Beyond Bouma’s window: How to explain global aspects of crowding?

A Doerig, A Bornet, R Rosenholtz, G Francis, AM Clarke, MH Herzog

PLoS computational biology 15 (5), e1006580, 2019

|

40 | 2019 |

| ||

|

Modern vision science for designers: Making designs clear at a

glance

R Rosenholtz, D Yu

Extended Abstracts of the 2019 CHI Conference on Human Factors in

Computing …, 2019

|

2 | 2019 |

| ||

|

Predicting road scenes from brief views of driving video

B Wolfe, L Fridman, A Kosovicheva, B Seppelt, B Mehler, B Reimer, …

Journal of vision 19 (5), 8-8, 2019

|

16 | 2019 |

| ||

|

Example outputs of the Texture Tiling Model (” mongrels”)

R Rosenholtz, D Yu, S Keshvari

|

2019 | |

| ||

|

Human Vision at a Glance (Invited Talk)

R Rosenholtz, D Yu

14th International Conference on Spatial Information Theory (COSIT

2019), 2019

|

2019 | |

| ||

|

Web pages: What can you see in a single fixation?

A Jahanian, S Keshvari, R Rosenholtz

Cognitive Research: Principles and Implications 3, 1-15, 2018

|

17 | 2018 |

| ||

|

Context mitigates crowding: Peripheral object recognition in real-world

images

MWA Wijntjes, R Rosenholtz

Cognition 180, 158-164, 2018

|

11 | 2018 |

| ||

|

Similarity effects in peripheral vision: improved representation or

cuing?

D Yu, R Rosenholtz

Journal of Vision 18 (10), 20-20, 2018

|

1 | 2018 |

| ||

|

Was that a moose on the road? Gist-like perception of emerging driving

hazards

B Wolfe, R Rosenholtz